Meta Avatar

This addon shows how to integrate Meta avatars with Fusion.

The main focus is on:

- Synchronizing avatars using Fusion networked variables

- Integrating lip sync with Photon Voice

Meta XR SDK

Instead of the OpenXR plugin used in the other samples, this addon uses the Oculus XR plugin.

The Meta XR SDK is added through Meta's scoped registry https://npm.developer.oculus.com/ (see the Meta documentation for details).

The packages installed from Meta include:

- Meta XR Core SDK

- Meta XR Platform SDK: required to access the Oculus user ID and load the user's Meta avatar.

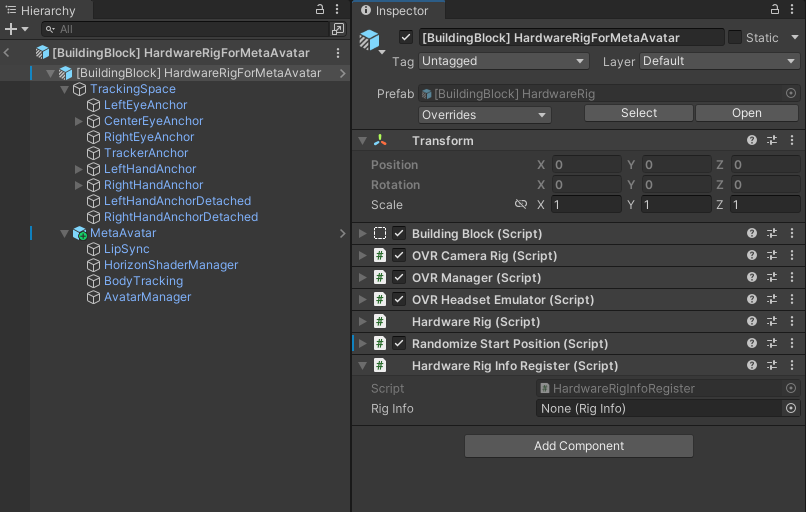

Oculus rig and building blocks

When the Oculus XR plugin is used instead of the OpenXR plugin, a specific rig is created to capture the headset and hand positions.

This hardware-collecting rig is created from the Meta building blocks.

- The prefab created in this step is available in the MetaOVRHandsSynchronization addon as the /Prefabs/Rig/BaseBuildingBlocks/[BuildingBlock] BaseRig prefab.

- The prefab actually used in the addon has synchronization components added, and is available as the /Prefabs/Rig/[BuildingBlock] HardwareRigForMetaAvatar prefab.

The MetaAvatar game object groups all the components required for Meta avatars to work properly:

- LipSync holds the OVRAvatarLipSyncContext component, which is provided by the Oculus SDK to set up the lip sync feature.

- BodyTracking holds the SampleInputManager component, which comes from the Oculus SDK (Asset/Avatar2/Example/Common/Scripts). It derives from the OvrAvatarInputManager base class and refers to the OVR Hardware Rig to set the avatar's tracking input.

- AvatarManager holds the OVRAvatarManager component, which is used to load Meta avatars.

Runner

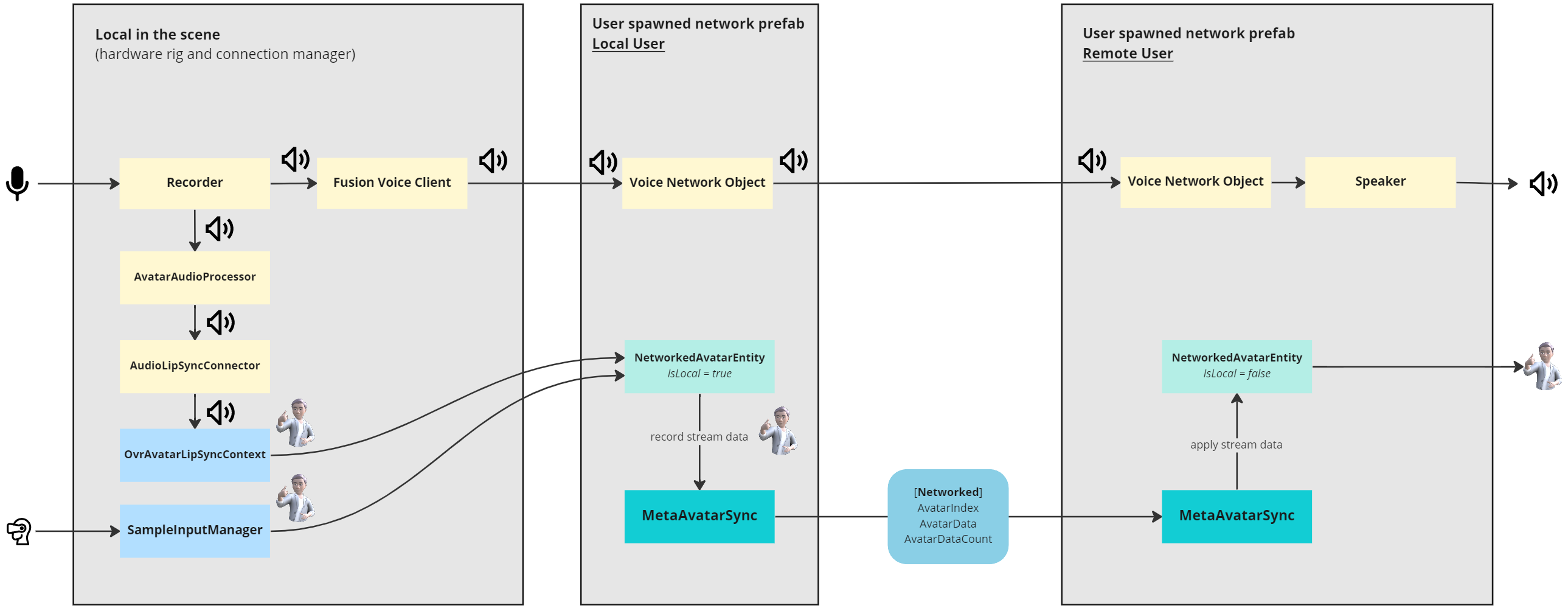

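The ConnectionManager on the Runner game object manages the connection to the Photon Fusion server and spawns the user's network prefab when the OnPlayerJoined callback is called.

Streaming voice over the network also requires the Fusion Voice Client. Its recorder field refers to the Recorder game object located under Runner.

For details on the integration of Photon Voice with Fusion, see the following page: Voice - Fusion Integration

The Runner game object also handles microphone permission through the MetaAvatarMicrophoneAuthorization component, which enables the Recorder object once microphone access is granted.

The Recorder handles the microphone connection. It also holds the AudioLipSyncConnector component, which receives the audio stream from the Recorder and passes it to the OVRAvatarLipSyncContext.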

Spawning the user's network prefab

When the OnPlayerJoined callback is called, the ConnectionManager spawns the user's network prefab, NetworkRigWithOVRHandsMetaAvatar Variant.

This prefab contains:

- MetaAvatarSync: selects a random avatar at start and streams the avatar over the network.

- NetworkedAvatarEntity: derives from Oculus's OvrAvatarEntity. It is used to configure the avatar depending on whether the network rig represents the local user or a remote user.

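Avatar synchronization

Overview

The MetaAvatarSync class drives the avatar synchronization.

When the user's network prefab is spawned for the local user, the ConfigureAsLocalAvatar() method makes the associated NetworkedAvatarEntity receive data from:

- OvrAvatarLipSyncContext, for lip sync

- SampleInputManager, for body tracking

This data is streamed over the network through networked variables.

When the user's network prefab is spawned for a remote user, MetaAvatarSync's ConfigureAsRemoteAvatar() is called, and the associated NetworkedAvatarEntity class builds and animates the avatar from the streamed data.

Avatar modes

MetaAvatarSync supports two modes:

- UserAvatar: loads the user's Meta avatar

- RandomAvatar: loads a random Meta avatar

When the local user's network prefab is spawned, the avatar is therefore selected according to this setting.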

C#

public override void Spawned()
{
    base.Spawned();
    if (Object.HasInputAuthority)
    {
        LoadLocalAvatar();
    }
    else
    {
        if (!avatarConfigured)
        {
            ConfigureAsRemoteAvatar();
        }
    }
    changeDetector = GetChangeDetector(ChangeDetector.Source.SnapshotFrom);

    // Trigger initial change if any
    OnUserIdChanged();
    ChangeAvatarIndex();
}

async void LoadLocalAvatar()
{
    if (avatarMode == AvatarMode.UserAvatar)
    {
        // Make sure to download the user id
        ConfigureAsLocalAvatar();
        UserId = await avatarEntity.LoadUserAvatar();
    }
    else
    {
        ConfigureAsLocalAvatar();
        // Random.Range(int, int) excludes the upper bound, so this picks an index from 0 to 30
        AvatarIndex = UnityEngine.Random.Range(0, 31);
        avatarEntity.LoadZipAvatar(AvatarIndex);
    }
}

AvatarIndex is a networked variable, so a change to its value is detected through the ChangeDetector and applied on all players.

C#

[Networked]
public int AvatarIndex { get; set; } = -1;
ChangeDetector changeDetector;

C#

public override void Render()
{
    base.Render();
    foreach (var changedPropertyName in changeDetector.DetectChanges(this))
    {
        if (changedPropertyName == nameof(UserId)) OnUserIdChanged();
        ...
    }
}
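The polling pattern used in Render() can be pictured as comparing the current values of the networked properties with a previously stored snapshot and reporting the names that differ. The sketch below models that idea in plain C#, without Unity or Fusion. `SnapshotChangeDetector` is a hypothetical class written only for illustration; it is an assumption about the general technique and does not describe how Fusion's ChangeDetector is implemented internally.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of snapshot-based change detection.
// Not part of Fusion or of the addon.
public sealed class SnapshotChangeDetector
{
    private readonly Dictionary<string, object> previous = new Dictionary<string, object>();

    // The detector starts from the state that exists when it is created.
    public SnapshotChangeDetector(IReadOnlyDictionary<string, object> initial)
    {
        foreach (var pair in initial)
        {
            previous[pair.Key] = pair.Value;
        }
    }

    // Returns the names whose value differs from the stored snapshot,
    // then updates the snapshot so each change is reported only once.
    public List<string> DetectChanges(IReadOnlyDictionary<string, object> current)
    {
        var changed = new List<string>();
        foreach (var pair in current)
        {
            if (!previous.TryGetValue(pair.Key, out var old) || !Equals(old, pair.Value))
            {
                changed.Add(pair.Key);
            }
            previous[pair.Key] = pair.Value;
        }
        return changed;
    }
}
```

A detector of this kind only reports differences from its starting snapshot, so values that were already set before it was created are never reported. That is consistent with Spawned() calling OnUserIdChanged() and ChangeAvatarIndex() once by hand after creating the detector.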

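Avatar data

The SampleInputManager component on the hardware rig tracks the user's movements.

When the player's network rig represents the local user, it is referenced by the NetworkedAvatarEntity.

This configuration is done in MetaAvatarSync (ConfigureAsLocalAvatar()).

At each LateUpdate(), MetaAvatarSync captures the avatar data of the local player.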

C#

private void LateUpdate()
{
    // Local avatar has fully updated this frame and can send data to the network
    if (Object.HasInputAuthority)
    {
        CaptureAvatarData();
    }
}

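The CaptureLODAvatar method gets the avatar stream buffer and copies it into a networked variable called AvatarData.

The capacity is limited to 1200 bytes, which is enough to stream a Meta avatar at Medium or High LOD. (In a production setup, size it to the amount of data actually needed, to avoid allocating memory unnecessarily.)

Note that, to keep the explanation simple, this sample streams the Medium LOD only.

The buffer size, AvatarDataCount, is also synchronized over the network.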

C#

[Networked, Capacity(1200)]
public NetworkArray<byte> AvatarData { get; }

[Networked]
public uint AvatarDataCount { get; set; }

When the avatar stream buffer is updated, remote users are notified and apply the received data to the network rig that represents the remote player.

C#

public override void Render()
{
    base.Render();
    foreach (var changedPropertyName in changeDetector.DetectChanges(this))
    {
        ...
        if (changedPropertyName == nameof(AvatarData)) ApplyAvatarData();
    }
}
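The capture and apply steps described above amount to a bounded copy into fixed-capacity storage, plus a separately synchronized byte count that tells the receiver how much of that storage is valid. The following is a minimal, engine-independent sketch of that idea. `AvatarStreamSlot`, `TryCapture` and `Read` are hypothetical names used only for illustration and are not part of the addon, Fusion, or the Meta Avatars SDK. The sketch also assumes that a frame larger than the capacity should be skipped rather than truncated.

```csharp
using System;

// Hypothetical stand-in for the pair of networked properties
// (AvatarData + AvatarDataCount); not the addon's actual code.
public sealed class AvatarStreamSlot
{
    public const int Capacity = 1200; // same capacity as AvatarData above

    private readonly byte[] data = new byte[Capacity];

    // Number of valid bytes currently stored in the slot.
    public uint Count { get; private set; }

    // Sender side: copy one captured frame into the fixed-size storage.
    // Returns false (and leaves the slot untouched) if the frame does not fit.
    public bool TryCapture(ReadOnlySpan<byte> frame)
    {
        if (frame.Length > Capacity)
        {
            return false;
        }
        frame.CopyTo(data);
        Count = (uint)frame.Length;
        return true;
    }

    // Receiver side: return only the valid part of the storage.
    public byte[] Read()
    {
        var result = new byte[Count];
        Array.Copy(data, result, (int)Count);
        return result;
    }
}
```

Keeping the count next to the array is what lets the receiver ignore stale bytes left over from a previous, larger frame.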

Loading personalized Meta avatars

Loading the local user's avatar

As seen above, loading the user's own Meta avatar requires AvatarMode to be set to AvatarMode.UserAvatar.

During the Spawned callback, the NetworkedAvatarEntity requests the avatar of the user account through LoadUserAvatar().

C#

/// <summary>
/// Load the user meta avatar based on its user id
/// Note: _deferLoading has to be set to true for this to work
/// </summary>
public async Task<ulong> LoadUserAvatar()
{
    // Initializes the OVR Platform, then gets the user id
    await FinOculusUserId();
    if (_userId != 0)
    {
        // Load the actual avatar
        StartCoroutine(Retry_HasAvatarRequest());
    }
    else
    {
        Debug.LogError("Unable to find UserId.");
    }
    return _userId;
}

This method returns the user ID of the Meta account, which is then stored in the UserId networked variable so that the user ID is synchronized across all clients.

C#

[Networked]
public ulong UserId { get; set; } = 0;

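To be able to load the user's avatar, note the following:

- On the NetworkedAvatarEntity component of the player prefab, set Defer Loading to true. This prevents the avatar from being loaded automatically at start.

Loading a remote user's avatar

When a remote user receives the UserId, it triggers the download of the avatar associated with that ID.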

C#

public override void Render()
{
    base.Render();
    foreach (var changedPropertyName in changeDetector.DetectChanges(this))
    {
        if (changedPropertyName == nameof(UserId)) OnUserIdChanged();
        ...
    }
}

void OnUserIdChanged()
{
    if (Object.HasStateAuthority == false && UserId != 0)
    {
        Debug.Log("Loading remote avatar: " + UserId);
        avatarEntity.LoadRemoteUserCdnAvatar(UserId);
    }
}

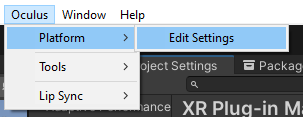

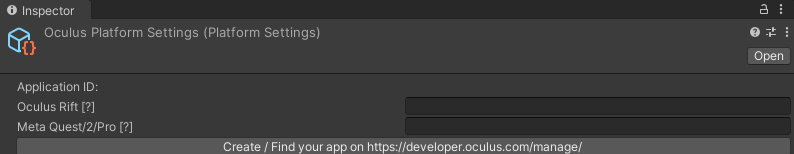

Access to Meta avatars

To be able to load Meta avatars, the App Id of the application must be added in the Unity menu Oculus > Platform > Edit Settings.

This App Id is found in the API > App Id field of the Meta dashboard.

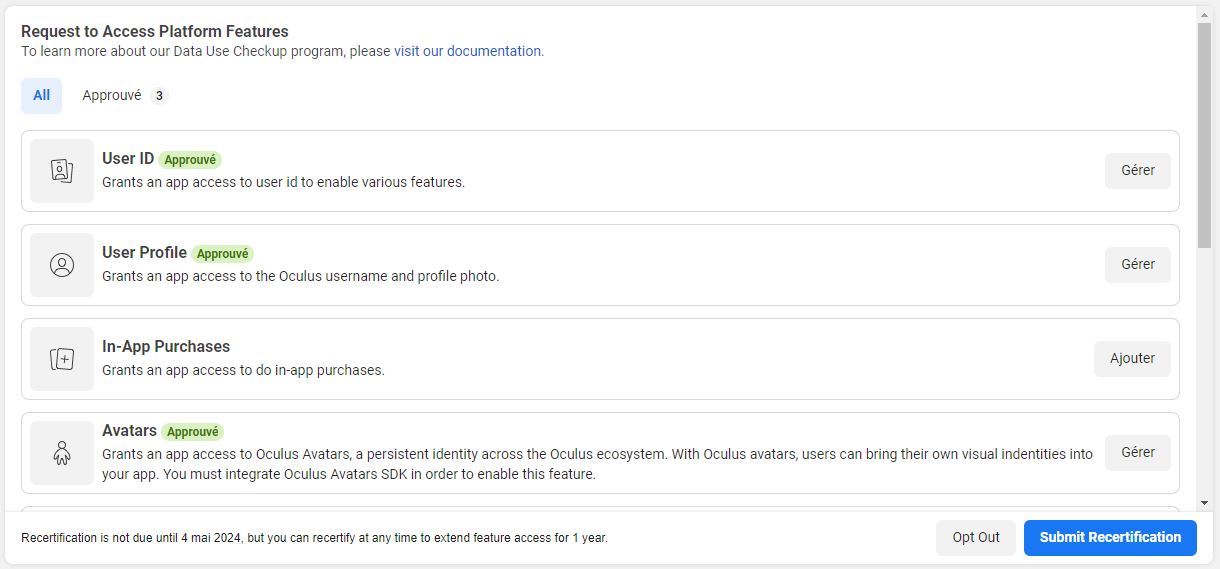

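The application must also have completed the Data use checkup section, with access to User Id, User profile and Avatars.

Testing user avatars

To see the avatar associated with the local Meta account during development, the local user account must be a member of the organization associated with the App Id set in the Oculus Platform Settings.

To see avatars across platforms (Quest and desktop builds), the Quest and Rift applications must be grouped together, as described in the Group App IDs Together chapter of the following page: Configuring Apps for Meta Avatars SDK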

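Lip sync

The microphone is initialized by the Photon Voice Recorder.

The OvrAvatarLipSyncContext on the OVRHardwareRig is configured to expect direct calls that feed it audio buffers.

A class hooks into the audio read by the Recorder and forwards it to the OvrAvatarLipSyncContext, as described below.

The Recorder class can forward the audio buffers it reads to classes that implement the IProcessor interface.

For details on how to create a custom audio processor, see the following page: Photon Voice - Frequently Asked Questions

To register such a processor when the Voice connection is established, an AudioLipSyncConnector, which derives from VoiceComponent, is added to the same game object as the Recorder.

This way it receives the PhotonVoiceCreated and PhotonVoiceRemoved callbacks, which makes it possible to add a post-processor when the Voice connection is established.

The post-processor that is attached is an AvatarAudioProcessor, which implements either IProcessor<float> or IProcessor<short>.

When a player connects, the MetaAvatarSync component looks for the AudioLipSyncConnector on the Recorder and sets the lipSyncContext field of its processor.

From then on, each time the Recorder calls the Process callback of the AvatarAudioProcessor, ProcessAudioSamples is called on the OvrAvatarLipSyncContext with the received audio buffer, so that lip sync is computed on the avatar model.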
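The forwarding chain described above can be sketched without Photon Voice or the Meta SDK. In this sketch, `IAudioProcessor<T>` stands in for Photon Voice's IProcessor<T> and `ILipSyncSink` stands in for OvrAvatarLipSyncContext. Both interfaces, as well as `ForwardingAudioProcessor` and `CountingSink`, are hypothetical, and their shapes are assumptions made for illustration rather than the real APIs.

```csharp
using System;

// Hypothetical stand-in for an audio post-processor interface.
public interface IAudioProcessor<T>
{
    T[] Process(T[] buffer);
}

// Hypothetical stand-in for the lip sync consumer.
public interface ILipSyncSink
{
    void ProcessAudioSamples(float[] samples, int channels);
}

// Passes audio through unchanged while also handing it to the lip sync sink.
public sealed class ForwardingAudioProcessor : IAudioProcessor<float>
{
    private readonly int channels;

    // Assigned once the local player's avatar is available; null until then.
    public ILipSyncSink LipSyncContext { get; set; }

    public ForwardingAudioProcessor(int channels)
    {
        this.channels = channels;
    }

    public float[] Process(float[] buffer)
    {
        // Lip sync is optional: audio keeps flowing even with no sink attached.
        LipSyncContext?.ProcessAudioSamples(buffer, channels);
        return buffer; // returned unchanged, so voice transmission is unaffected
    }
}

// Minimal sink used only to demonstrate the flow.
public sealed class CountingSink : ILipSyncSink
{
    public int SamplesSeen { get; private set; }

    public void ProcessAudioSamples(float[] samples, int channels)
    {
        SamplesSeen += samples.Length;
    }
}
```

Leaving the sink unset until the player's avatar exists mirrors the order described above: the processor is registered when the voice connection is created, and the lip sync context is only filled in later, when the player connects.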

The lip sync data is then streamed together with the rest of the avatar's body information: it is captured with RecordStreamData_AutoBuffer on the avatar during MetaAvatarSync's LateUpdate.

Dependencies

- Meta Avatars SDK (com.meta.xr.sdk.avatars) 24.1.1 + Sample scene

- Meta Avatars SDK Sample Assets (com.meta.xr.sdk.avatars.sample.assets) 24.1.1

- Meta XR Core SDK (com.meta.xr.sdk.core) 62.0.0

- Meta XR Platform SDK (com.meta.xr.sdk.platform) 62.0.0

- Photon Voice SDK

- MetaOVRHandsSynchronization addon

Demo

The demo scene is located in the Assets\Photon\FusionAddons\MetaAvatar\Demo\Scenes\ folder.

Download

The latest version of this addon is included in the Industries addon project.

Supported topologies

- Shared mode

Changelog

- Version 2.0.1:

- Compatibility with packaged Meta avatar com.meta.xr.sdk.avatars 24.1.1

- Version 2.0.0: First release