XRHands synchronization

This module shows how to synchronize the state of the hands provided by XR Hands (including finger tracking).

It can display hands either:

- from the data received from grabbed controllers

- from finger tracking data.

Regarding finger tracking data, the addon demonstrates how to highly compress the hand bones data to reduce bandwidth consumption.

Note:

This addon is similar to Meta OVR hands synchronization, but for an OpenXR context (Oculus Quest, Apple Vision Pro, ...).

However, it contains a few improvements, and it also adds some gesture detection tools.

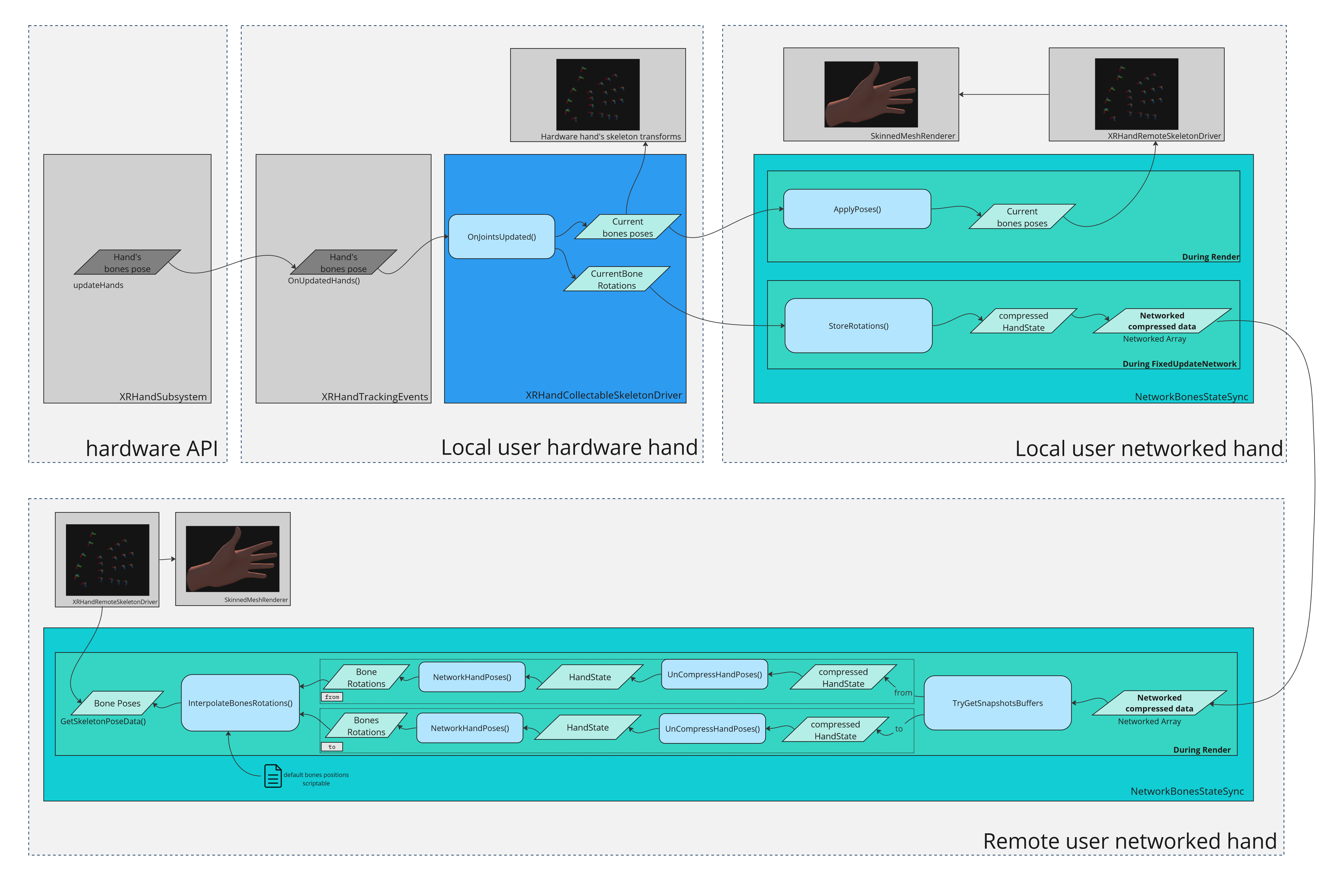

Hand logic overview

This addon makes controller-based hand tracking and finger-based hand tracking coexist. The goal is to have a seamless transition from one to the other.

To do so:

- as in other VR samples, when the controller is used, the hand model displayed is the one from the Oculus Sample Framework.

- when finger tracking is used, for the local user, the XRHandCollectableSkeletonDriver, a subclass of the XRHands's XRHandSkeletonDriver component, collects the hand and finger bones data and applies them to a local skeleton. This skeleton can be used to display a local-only mesh (but in the default prefab, we hide it and show instead the same networked version of the hands as for remote users).

- for remote users, a NetworkBonesStateSync component (described below) recovers the hand and finger bones rotation data from the network and moves the transforms of a hand skeleton, which in turn lets a skinned mesh renderer display the hand properly.

- during every Render, the NetworkBonesStateSync uses Fusion interpolation data to interpolate the hand bones rotations smoothly between two received hand states, to display smooth bone rotations even between two ticks.

Local hardware rig hand components

The XRHandCollectableSkeletonDriver component, placed under the local user hardware rig's hands hierarchy, collects the hand state to provide it to synchronization components. It relies on the XRHands's parent XRHandSkeletonDriver component to have access to the hand state (including notably the finger bones rotations), and requires a sibling XRHandTrackingEvents component.

It also includes helper properties to know if finger tracking is currently used.

Note:

The XRHandCollectableSkeletonDriver also contains analysis code that finds the default bone positions and suggests a compressed representation for bone rotations. Its output can be used to fill the scriptable objects needed for the hand state synchronization, as explained in "Network rig hand components" below.

Note that default scriptable objects are provided by the addon for most needs: while the analysis code is included in the addon, it should not be needed unless the hand bones change a lot.

Network rig hand components

For the local user, the NetworkBonesStateSync component on the user network rig looks for a component implementing the IBonesCollecter interface on the local hardware hand to find the local hand state, and then stores it in network variables during FixedUpdateNetwork.

This IBonesCollecter interface is implemented by the XRHandCollectableSkeletonDriver component, and:

- provides the finger tracking status

- provides the hand's bones rotations.

For remote users, those synchronized data are parsed to recreate a local hand state during Render.

In addition to the rotation data synchronized over the network, a BonesStateScriptableObject provides the bones' local positions (they never change).

The addon includes LeftXRHandsDefaultBonesState and RightXRHandsDefaultBonesState assets that define those bones' local positions for XRHands's hands.

To show a smooth rotation of each bone at any given moment, the NetworkBonesStateSync interpolates the bone rotations between two received states, using TryGetSnapshotsBuffers(out var fromBuffer, out var toBuffer, out var alpha) to find the "from" and "to" bone rotation states to interpolate between.

These hand poses are then applied to a local skeleton by any component on the network hand implementing the IBonesReader interface, such as XRHandRemoteSkeletonDriver (a subclass of XRHands's XRHandSkeletonDriver).

Finally, a SkinnedMeshRenderer uses this skeleton to display the hand properly.
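The interpolation step described in this section can be illustrated outside Unity. The following is a minimal, language-agnostic sketch in Python, assuming each hand state is a list of unit quaternions stored as (x, y, z, w) tuples and that `alpha` plays the role of the interpolation factor returned alongside the "from" and "to" states. The function names `slerp` and `interpolate_hand` are hypothetical and are not part of the addon or of Fusion.

```python
import math

def slerp(q_from, q_to, alpha):
    """Spherical interpolation between two unit quaternions (x, y, z, w)."""
    dot = sum(a * b for a, b in zip(q_from, q_to))
    # q and -q encode the same rotation: flip one to follow the shortest arc.
    if dot < 0.0:
        q_to = tuple(-c for c in q_to)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: a linear blend avoids dividing by ~0.
        blended = tuple(a + alpha * (b - a) for a, b in zip(q_from, q_to))
    else:
        theta = math.acos(dot)
        w_from = math.sin((1.0 - alpha) * theta) / math.sin(theta)
        w_to = math.sin(alpha * theta) / math.sin(theta)
        blended = tuple(w_from * a + w_to * b for a, b in zip(q_from, q_to))
    norm = math.sqrt(sum(c * c for c in blended))
    return tuple(c / norm for c in blended)

def interpolate_hand(from_state, to_state, alpha):
    """Interpolate every bone rotation between two received hand states."""
    return [slerp(a, b, alpha) for a, b in zip(from_state, to_state)]
```

Interpolating per bone on every rendered frame is what lets the displayed fingers move smoothly even though new states only arrive at the network tick rate.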

Bandwidth optimization

Bone information is quite expensive to transfer: the hand state includes 24 quaternions to synchronize.

The NetworkBonesStateSync component uses some properties of the hand bones (specific rotation axis, limited range of motion, ...) to reduce the required bandwidth. The level of precision desired for each bone can be specified in a dedicated HandSynchronizationScriptable scriptable object.

If none is provided in NetworkBonesStateSync's handSynchronizationScriptable attribute, a default compression is used, which is less efficient than the one defined in the LeftHandSynchronization and RightHandSynchronization assets provided in the addon. These have been built to match most needs:

- they offer a very high compression, using around 20 times fewer bytes (a hand's full set of bone rotations is stored in 19 bytes instead of 386 bytes for a full quaternion transfer).

- without the compression having any visible impact on the remote user's finger representation.
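To give an intuition of why a known rotation axis and a limited range of motion save so much bandwidth, here is a minimal sketch in Python. It assumes a bone that rotates around a single axis within a known angular range, and stores its angle as a small integer code. The ranges, bit counts, and function names are hypothetical, chosen only for illustration: this is not the addon's actual encoding or bit layout.

```python
def quantize_angle(angle_deg, min_deg, max_deg, bits):
    """Map an angle inside [min_deg, max_deg] to an integer code on `bits` bits."""
    levels = (1 << bits) - 1
    clamped = min(max(angle_deg, min_deg), max_deg)
    return round((clamped - min_deg) / (max_deg - min_deg) * levels)

def dequantize_angle(code, min_deg, max_deg, bits):
    """Recover an approximate angle from its integer code."""
    levels = (1 << bits) - 1
    return min_deg + code / levels * (max_deg - min_deg)

def pack_codes(codes_and_bits):
    """Pack (code, bits) pairs into bytes, first pair in the highest bits."""
    acc, total_bits = 0, 0
    for code, bits in codes_and_bits:
        acc = (acc << bits) | code
        total_bits += bits
    padding = (-total_bits) % 8  # pad the tail up to a whole byte
    acc <<= padding
    return acc.to_bytes((total_bits + padding) // 8, "big")

# Hypothetical example: three joints of one finger, 6 bits each, range 0-90 degrees.
codes = [(quantize_angle(a, 0.0, 90.0, 6), 6) for a in (10.0, 35.0, 60.0)]
payload = pack_codes(codes)  # 18 bits of data, padded to 3 bytes
```

With these illustrative settings, each joint costs 6 bits instead of the 16 bytes of a full four-float quaternion, at the price of a worst-case error of half a quantization step (about 0.7 degrees here).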

Toggling hand models

The HandRepresentationManager component handles which mesh is displayed for a hand, based on which hand tracking mode is currently used:

- either the Oculus Sample Framework hand mesh when controller-tracking is used,

- or the mesh relying on the bones skeleton animated by an XRHandSkeletonDriver component logic when finger-tracking is used.

Two versions of this script exist, one for the local hardware hands (used to animate the hand skeleton, for collider localisation purposes, or if we need offline hands), one for the network hands.

Local hardware rig hands

This version relies on an IBonesCollecter (such as XRHandCollectableSkeletonDriver) placed on the hardware hands.

In addition to the other features, it also makes sure to switch between the two grabbing colliders: one located at the position of the palm when finger tracking is used, and one located at the position of the palm when controller-based tracking is used (the "center" of the hand is not exactly at the same position in those two modes). It does the same for the two index tip colliders.

Note: in the current setup, it has been chosen to display the networked hands only: the hands in the hardware rig are only there to collect the bones position and to properly animate the index collider position. Hence, the material used for the hardware hand is transparent (this is required on Android for the controller hand, because the animation won't move the bones if the renderer is disabled). The hardware hand mesh is automatically set to transparent thanks to the MaterialOverrideMode of HardwareHandRepresentationManager being set to Override, with a transparent material provided in the overrideMaterialForRenderers field.

Network rig hands

The NetworkHandRepresentationManager component handles which mesh is displayed based on the hand mode: either the Oculus Sample Framework hand mesh when controller tracking is used (it checks this in the NetworkBonesStateSync data synchronizing the finger tracking status), or the mesh relying on the NetworkBonesStateSync logic.
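The selection rule described above boils down to a single synchronized flag driving which of the two meshes is visible. A minimal sketch in Python, with hypothetical names (`HandMesh`, `renderer_states`) that do not exist in the addon:

```python
from enum import Enum

class HandMesh(Enum):
    CONTROLLER = "controller hand mesh"
    FINGER_TRACKING = "bone-driven hand mesh"

def mesh_to_display(finger_tracking_active: bool) -> HandMesh:
    """Pick the mesh matching the synchronized finger tracking status."""
    if finger_tracking_active:
        return HandMesh.FINGER_TRACKING
    return HandMesh.CONTROLLER

def renderer_states(finger_tracking_active: bool) -> dict:
    """Return, for each mesh, whether its renderer should be visible."""
    shown = mesh_to_display(finger_tracking_active)
    return {mesh: mesh is shown for mesh in HandMesh}
```

Because the flag travels with the bone data, remote clients switch mesh at the same moment the local user switches tracking mode.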

Avatar addon

If the avatar addon is used in the project, it is also possible to make sure to apply hand colors based on the avatar skin color.

To do so:

- uncomment in HandManagerAvatarRepresentationConnector the second line #define AVATAR_ADDON_AVAILABLE

- place a HandManagerAvatarRepresentationConnector next to each NetworkHandRepresentationManager and/or HardwareHandRepresentationManager

Gesture recognition

To help use finger tracking data, some helper scripts are provided to analyze the hand pose.

The FingerDrivenGesture is the base of those scripts, and handles the connection to the OpenXR hands subsystem, as well as tracking-lost callbacks.

The FingerDrivenHardwareRigPose fills the hand command normally filled by the controller's buttons (to determine if the user is grabbing, pointing with the index finger, ...). It also detects grabbing, either by pinching or by pressing the middle and ring fingers (both options can be enabled at the same time, or just one, or none), and triggers isGrabbing on the HardwareHand.
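The two grabbing options can be sketched as follows, in Python. This assumes a pinch is detected from the distance between the thumb tip and the index tip, and a finger press from a normalized curl value per finger (0 for open, 1 for fully curled). The thresholds, the `GrabSettings` type, and the `is_grabbing` function are hypothetical and only illustrate the "either option, both, or none" behaviour, not the addon's actual detection code.

```python
import math
from dataclasses import dataclass

@dataclass
class GrabSettings:
    detect_pinch: bool = True
    detect_finger_press: bool = True
    pinch_distance: float = 0.02  # meters, hypothetical threshold
    press_curl: float = 0.7       # 0 = open, 1 = fully curled, hypothetical

def is_grabbing(thumb_tip, index_tip, middle_curl, ring_curl, settings):
    """Return True if any enabled grabbing gesture is currently detected."""
    pinching = (
        settings.detect_pinch
        and math.dist(thumb_tip, index_tip) <= settings.pinch_distance
    )
    pressing = (
        settings.detect_finger_press
        and middle_curl >= settings.press_curl
        and ring_curl >= settings.press_curl
    )
    return pinching or pressing
```

With both options disabled, the function never reports a grab, which matches the "none" case mentioned above.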

The FingerDriverBeamer detects a "gun" pose (only the index and thumb raised) to trigger the RayBeamer (in order to teleport). Once enabled, the beam stays active as long as the thumb is still raised (the other fingers are no longer checked).
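The "strict to enable, lenient to keep" behaviour described above is a small latch. A minimal sketch in Python, assuming the set of currently raised fingers is already known; the `GunPoseLatch` class and the finger names are hypothetical:

```python
class GunPoseLatch:
    """Tracks whether the beam should be active from the raised fingers."""

    def __init__(self):
        self.active = False

    def update(self, raised_fingers):
        """raised_fingers: set of names such as {"thumb", "index"}."""
        if self.active:
            # Once enabled, only the thumb keeps the beam alive.
            self.active = "thumb" in raised_fingers
        else:
            # Enabling requires the exact pose: index and thumb only.
            self.active = raised_fingers == {"thumb", "index"}
        return self.active
```

Relaxing the condition once the beam is active avoids losing it when the other fingers briefly move or are poorly tracked while aiming.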

The FingerDrivenMenu pops up a menu when the user looks at their hand for a short duration. The menu then keeps receiving updates as long as the hand is still turned toward the headset.

The Pincher, a subclass of the toucher, detects pinches and forwards them to touched objects implementing the IPinchable interface. Those objects can "consume" a pinch with TryConsumePinch, to be sure that a single pinch move triggers only one action.
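The "consume" idea can be sketched as a one-shot token shared by all touched objects. The Python below is only an illustration of the pattern: the `Pinch` and `PinchableButton` classes and the `try_consume` method are hypothetical stand-ins, not the addon's Pincher, IPinchable, or TryConsumePinch implementation.

```python
class Pinch:
    """One pinch move; it can be consumed at most once."""

    def __init__(self):
        self._consumed = False

    def try_consume(self):
        """Return True only for the first caller, False afterwards."""
        if self._consumed:
            return False
        self._consumed = True
        return True

class PinchableButton:
    """Hypothetical touched object reacting to a pinch."""

    def __init__(self, name):
        self.name = name
        self.triggered = False

    def on_pinch(self, pinch):
        # Act only if no other object already used this pinch.
        if pinch.try_consume():
            self.triggered = True

# Two overlapping objects receive the same pinch: only the first one reacts.
pinch = Pinch()
first, second = PinchableButton("first"), PinchableButton("second")
for touched in (first, second):
    touched.on_pinch(pinch)
```

This keeps a single pinch from firing several actions when the fingers overlap more than one pinchable object.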

Demo

A demo scene can be found in the Assets\Photon\FusionAddons\XRHandsSynchronization\Demo\Scenes\ folder.

Dependencies

- XRShared addon 2.0

Download

The latest version of this addon is included in the Industries addon project.

It is also included in the free XR addon project.

Supported topologies

- shared mode

Changelog

- Version 2.0.6:

- NetworkHandRepresentationManager can now specify a material for the local user

- Fix to prefab's index tip position

- Version 2.0.5:

- Allow to work with a IBonesCollector not providing bones positions

- Allow to skip automatic hardware hand wrist translation (useful for XRIT rigs)

- Version 2.0.4: Allow to disable tip collider in finger tracking

- Version 2.0.3: Ensure compatibility with Unity 2021.x (box colliders, edited in 2022.x, in prefab had an improper size when opened in 2021.x)

- Version 2.0.2: Add define checks (to handle when Fusion not yet installed)

- Version 2.0.1: Change transparent material (unlit shader)

- Version 2.0.0: First release